Biography

Pu Cao is a fourth-year Ph.D. student at Beijing University of Posts and Telecommunications (BUPT), supervised by Prof. Qing Song and Dr. Lu Yang. His research interests are in computer vision, and he is currently working on visual synthesis and multimodal large language models.

Interests

- Visual Generation

- Multimodal Large Language Models

- Embodied AI

Education

PhD in Artificial Intelligence, 2022

Beijing University of Posts and Telecommunications

BSc in Information and Computational Science, 2018

University of Science and Technology Beijing

News

- 2025.11 One paper accepted to AAAI 2026 (oral).

- 2025.01 One paper accepted to CVPR 2025.

Publications

Browse the highlights below or view the complete publications archive.

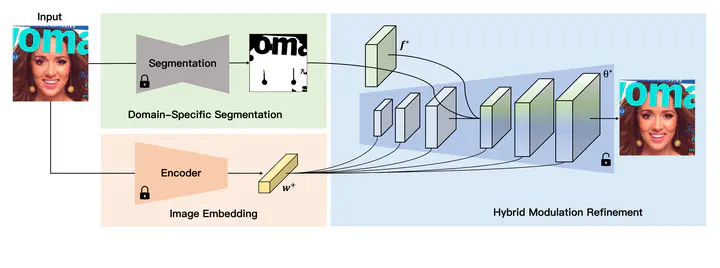

What Decreases Editing Capability? Domain-Specific Hybrid Refinement for Improved GAN Inversion

WACV 2024

Editing capability inevitably decreases in previous refinement methods (e.g., PTI, HFGI, and SAM). In this work, we explore the idea of “divide and conquer” to address this problem. We combine two mainstream refinement mechanisms (i.e., weight and feature modulation) and achieve extraordinary inversion and editing results.

Projects

A diffusion training toolbox based on diffusers and existing SOTA methods, including DreamBooth, Textual Inversion, LoRA, Custom Diffusion, XTI, ….

A collection of resources on controllable generation with text-to-image diffusion models.

A GAN inversion toolbox based on the PyTorch library. We design a unified pipeline for inversion methods and conduct a comprehensive benchmark.

Service

Conference & Journal Service

Conferences

- ICLR 2026

- CVPR 2025/2026

- ICCV 2025

- ECCV 2024 (Outstanding Reviewer)

- WACV 2024/2025

Journals

- TPAMI

- TIP

- TCSVT

- TMM

- TNNLS

Contact

- caopu@bupt.edu.cn

- No.10 Xitucheng Road, Haidian District, Beijing, China